Therefore, it isn’t capable of imitating the XOR function. From the XOR truth table it can be inferred that XOR outputs 1 when its two inputs differ and 0 when they are the same. The output of XOR logic is given by the equation $Y = A\bar{B} + \bar{A}B$. We can also leverage the ability of perceptrons to compute binary operations to perform multiplication.
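The XOR rule, $Y = A\bar{B} + \bar{A}B$ ("A and not B, or not A and B"), can be evaluated for every input pair in a few lines. This is a small illustrative sketch; the names `xor` and `truth_table` are our own, not from any library.

```python
def xor(a: int, b: int) -> int:
    """Return 1 when exactly one of the two inputs is 1, else 0."""
    # Y = (A AND NOT B) OR (NOT A AND B)
    return int((a and not b) or (not a and b))


def truth_table():
    """List (a, b, xor(a, b)) for every combination of two binary inputs."""
    return [(a, b, xor(a, b)) for a in (0, 1) for b in (0, 1)]


if __name__ == "__main__":
    for a, b, y in truth_table():
        print(f"{a} {b} -> {y}")
```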

If you want to read another explanation of why a stack of linear layers is still linear, see this page of Google’s Machine Learning Crash Course. Let’s bring everything together by creating an MLP class. The plot function is exactly the same as the one in the Perceptron class. In the forward pass, we apply the wX + b relation multiple times, applying a sigmoid function after each call. Its derivative is also implemented, through the _delsigmoid function.
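A minimal sketch of such a class follows, assuming NumPy, a row-vector convention (each layer computes `activation @ W + b`), and normally distributed initial weights. Only the `MLP` name and `_delsigmoid` come from the text; `layer_sizes`, `forward` and `_sigmoid` are hypothetical names, and the plot function is omitted.

```python
import numpy as np


class MLP:
    """Minimal multi-layer perceptron: repeated wX + b, each followed by a sigmoid."""

    def __init__(self, layer_sizes, seed=0):
        rng = np.random.default_rng(seed)
        # One weight matrix and one bias vector per pair of consecutive layers.
        self.weights = [rng.normal(0.0, 1.0, size=(n_in, n_out))
                        for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]
        self.biases = [np.zeros(n_out) for n_out in layer_sizes[1:]]

    @staticmethod
    def _sigmoid(z):
        return 1.0 / (1.0 + np.exp(-z))

    def _delsigmoid(self, z):
        # Derivative of the sigmoid, expressed through the sigmoid itself.
        s = self._sigmoid(z)
        return s * (1.0 - s)

    def forward(self, X):
        """Apply each layer's affine map, then the sigmoid, layer by layer."""
        activation = np.asarray(X, dtype=float)
        for W, b in zip(self.weights, self.biases):
            activation = self._sigmoid(activation @ W + b)
        return activation


mlp = MLP([2, 2, 1])
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
print(mlp.forward(X).shape)  # (4, 1)
```

A backward pass would call `_delsigmoid` on each layer's pre-activation values to scale the propagated error.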

A single perceptron, therefore, cannot separate our XOR gate, because it can only draw one straight line. To speed things up, we let the computer do the repetitive work: when we run this iteration 10,000 times, it gives us an output of about $0.9999$. This is very close to our expected value of 1 and demonstrates that the network has learned what the correct output should be. The basic idea is to take the input, multiply it by the synaptic weight, and check whether the output is correct. If it is not, adjust the weight, multiply it by the input again, check the output, and repeat until we have reached an ideal synaptic weight. The error falls abruptly to a small value and then decreases slowly over the epochs.
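The adjust-and-repeat loop can be sketched with a single sigmoid neuron trained on the input $0,1 \mapsto 1$. The update rule used here (error × sigmoid slope × input) is an assumption, a common textbook choice rather than necessarily the one behind the figure quoted above, so the final output lands close to 1 without necessarily matching that value. `train_single_weight` is a hypothetical helper.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def train_single_weight(inputs, target, iterations=10_000, seed=1):
    """Repeatedly adjust the synaptic weights until the output approaches target."""
    rng = random.Random(seed)
    weights = [rng.uniform(-1.0, 1.0) for _ in inputs]
    for _ in range(iterations):
        # Multiply the inputs by the synaptic weights and squash with a sigmoid.
        output = sigmoid(sum(w * x for w, x in zip(weights, inputs)))
        error = target - output
        # Adjust each weight in proportion to the error, the sigmoid slope and its input.
        adjustment = error * output * (1.0 - output)
        weights = [w + adjustment * x for w, x in zip(weights, inputs)]
    return weights, sigmoid(sum(w * x for w, x in zip(weights, inputs)))


weights, output = train_single_weight([0, 1], target=1)
print(round(output, 4))
```

Because the first input is 0, only the second weight ever changes, which is the same observation the text makes about multiplying by 0.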

Multi-Layer Perceptron using Python

The XOR problem can be solved with a Multi-Layer Perceptron, i.e. a neural network architecture with an input layer, a hidden layer, and an output layer. During forward propagation the inputs pass through these layers to produce a prediction; the weights of each layer are then updated by backpropagation until the network executes the XOR logic. The neural network architecture to solve the XOR problem will be as shown below. In the above figure, we can see that above the linear separating line the red triangle overlaps with the pink dot, so the XOR data points cannot be linearly separated.

I hope that the mathematical explanation of neural networks, along with their coding in Python, will help other readers understand how a neural network works. The following code gist shows the initialization of the parameters for the neural network. This meant that neural networks couldn’t be used for many of the problems that required a complex network architecture. Here, y_output is the neural network's estimate of the function. A neural network is essentially a series of hyperplanes (the generalization of a plane to N dimensions) that group or separate regions of the input space. This tutorial is very heavy on math and theory, but it’s important that you understand it before we move on to the coding, so that you have the fundamentals down.
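The gist itself is not reproduced here. A comparable initialization, sketched under our own assumptions (NumPy, a 2-2-1 layout, weights drawn uniformly from [-1, 1], zero biases, and a hypothetical `initialize_parameters` helper), might look like this:

```python
import numpy as np


def initialize_parameters(n_input=2, n_hidden=2, n_output=1, seed=42):
    """Randomly initialize weights and zero biases for a small XOR network."""
    rng = np.random.default_rng(seed)
    return {
        # Random weights break the symmetry between hidden neurons.
        "W1": rng.uniform(-1.0, 1.0, size=(n_input, n_hidden)),
        "b1": np.zeros((1, n_hidden)),
        "W2": rng.uniform(-1.0, 1.0, size=(n_hidden, n_output)),
        "b2": np.zeros((1, n_output)),
    }


params = initialize_parameters()
for name, value in params.items():
    print(name, value.shape)
```

Zero biases are fine here because the random weights already make the hidden neurons differ; initializing the weights to zero as well would keep both hidden neurons identical during training.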

Significance of XOR in Neural Network

That would, in most cases, lead to overfitting. The choice between the perceptron model and logistic regression depends on the problem and the dataset. Logistic regression is often more reliable and applies to a broader range of problems because it is based on probabilities, but its decision boundary is still linear in the inputs; it can model non-linear boundaries only when it is given non-linear features.

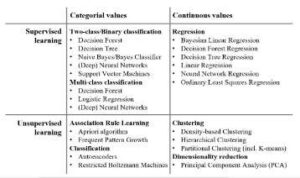

The perceptron model can solve problems with a clear linear decision boundary, but it struggles with tasks whose classes cannot be separated by a straight line. The introduction of multi-layer perceptrons (MLPs), consisting of multiple layers of perceptron-like units, marked a significant advancement in artificial neural networks [5]. MLPs can approximate any continuous function, given a sufficient number of hidden layers and neurons [23]. Trained with the backpropagation algorithm, MLPs can solve more complex tasks, such as the XOR problem, which a single perceptron cannot solve.

Attempt #1: The Single Layer Perceptron

On the contrary, the function drawn to the right of the ReLU function is linear. Stacking multiple linear activation functions still leaves the network linear. Of all the two-input logic gates, XOR and XNOR are the only ones that are not linearly separable. You’ll notice that the training loop never terminates, since a perceptron can only converge on linearly separable data.
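To see this behaviour without an endless loop, the sketch below runs Rosenblatt's learning rule with a step activation and caps the number of epochs. The `max_epochs` cap and the `train_perceptron` name are our own additions, not part of the original algorithm. The rule converges on AND but never completes an error-free pass on XOR.

```python
def train_perceptron(samples, lr=1.0, max_epochs=100):
    """Perceptron learning rule with a step activation.

    Returns (weights, bias, converged). The epoch cap stands in for the
    loop that would otherwise never terminate on non-separable data.
    """
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(max_epochs):
        errors = 0
        for (x1, x2), target in samples:
            prediction = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
            update = lr * (target - prediction)
            if update != 0:
                w[0] += update * x1
                w[1] += update * x2
                b += update
                errors += 1
        if errors == 0:  # a full pass with no mistakes: converged
            return w, b, True
    return w, b, False


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND = list(zip(inputs, [0, 0, 0, 1]))
XOR = list(zip(inputs, [0, 1, 1, 0]))
print(train_perceptron(AND)[2])  # True
print(train_perceptron(XOR)[2])  # False
```

An error-free pass on XOR would mean a single line classifies all four points, which is impossible, so the XOR run always exhausts the cap.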

Rosenblatt proved the perceptron convergence theorem in 1960. It states that if a dataset is linearly separable, the perceptron learning algorithm will find a solution in a finite number of steps [8]. In other words, given enough iterations, the perceptron will find weights and a bias that correctly classify every data point in a linearly separable dataset. The information of a neural network is stored in the interconnections between the neurons, i.e., in the weights. A neural network learns by updating its weights according to a learning algorithm that helps it converge to the expected output. The learning algorithm is a principled way of changing the weights and biases based on the loss function.

Warren McCulloch and Walter Pitts’ work on artificial neurons in 1943 [1] inspired a psychologist named Frank Rosenblatt to create the perceptron model in 1957 [2]. Rosenblatt’s perceptron was the first neural network (NN) to be described with an algorithm, paving the way for modern machine learning (ML) techniques. Upon its discovery, the perceptron received much attention from scientists and the general public. Some saw this new technology as essential for intelligent machines: a model for learning and changing [3].

An “activation function” is a function that determines a neuron's output from its inputs. Although there are several activation functions, I’ll focus on only one to explain what they do. Let’s meet the ReLU (Rectified Linear Unit) activation function, which outputs $\max(0, x)$. Without such a non-linearity, it doesn’t matter how many linear layers we stack: they always collapse into a single matrix in the end.
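A short NumPy check of both points, using arbitrary random matrices: two stacked linear layers give the same result as the single matrix `W2 @ W1`, while ReLU, $\max(0, z)$, breaks the additivity $f(a + b) = f(a) + f(b)$ that every linear map satisfies. The matrix shapes and seed are illustrative choices.

```python
import numpy as np


def relu(z):
    """ReLU: passes positive values through and clips negatives to 0."""
    return np.maximum(0.0, z)


rng = np.random.default_rng(0)
W1 = rng.normal(size=(3, 2))  # first linear layer: 2 -> 3
W2 = rng.normal(size=(1, 3))  # second linear layer: 3 -> 1
x = np.array([1.0, -2.0])

# Two stacked linear layers equal one layer whose matrix is W2 @ W1.
stacked = W2 @ (W1 @ x)
collapsed = (W2 @ W1) @ x
print(np.allclose(stacked, collapsed))  # True

# ReLU is not additive, so a ReLU layer cannot be folded into a matrix.
a, b = np.array([-1.0]), np.array([1.0])
print(relu(a + b), relu(a) + relu(b))  # [0.] [1.]
```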

At its core, the perceptron model is a linear classifier. It aims to find a “hyperplane” (a line in two-dimensional space, a plane in three-dimensional space, or a higher-dimensional analog) separating two data classes. A dataset is linearly separable if there exists a hyperplane that correctly sorts all of its data points [6].
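Which two-input gates are linearly separable can be checked numerically. The sketch below enumerates the truth tables a single step-activated neuron produces for weights and biases on a coarse grid, then lists the ones it never reaches. The grid is an arbitrary choice, so this is a demonstration rather than a proof. Truth tables are ordered for the inputs (0,0), (0,1), (1,0), (1,1).

```python
import itertools

INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]


def threshold_unit(w1, w2, b):
    """Outputs of a single step-activated neuron on the four binary inputs."""
    return tuple(1 if w1 * x1 + w2 * x2 + b > 0 else 0 for x1, x2 in INPUTS)


# Coarse grid of weights and biases: -2.0, -1.5, ..., 2.0
grid = [v / 2 for v in range(-4, 5)]
reachable = {threshold_unit(w1, w2, b)
             for w1, w2, b in itertools.product(grid, repeat=3)}

all_gates = set(itertools.product((0, 1), repeat=4))  # all 16 two-input gates
not_separable = sorted(all_gates - reachable)
print(len(reachable))  # 14
print(not_separable)   # [(0, 1, 1, 0), (1, 0, 0, 1)] -> XOR and XNOR
```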

But in other cases, the output could be a probability, a number greater than 1, or anything else. Normalizing the output in this way is the job of an activation function, of which there are many. As for the weights of the overall network, in part 1 and above we deduced the weights that make the system act as an AND gate and as a NOR gate. We will use those weights to implement the XOR gate. For layer 1, 3 of the total 6 weights are the same as those of the NOR gate, and the remaining 3 are the same as those of the AND gate.
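The specific part 1 weights are not reproduced in this section, so the sketch below uses hypothetical values that implement NOR and AND with a step activation, storing the bias as the first of each neuron's three weights. It also assumes the output neuron acts as a NOR gate, since NOR(NOR(a, b), AND(a, b)) equals XOR(a, b).

```python
import numpy as np


def step(z):
    """Binary step activation: 1 where z > 0, else 0."""
    return (z > 0).astype(int)


# Hypothetical weights; each row is [bias, w1, w2], the bias multiplying a constant 1.
NOR = np.array([0.5, -1.0, -1.0])
AND = np.array([-1.5, 1.0, 1.0])

W_hidden = np.stack([NOR, AND])  # layer 1: 3 NOR weights + 3 AND weights = 6
w_output = NOR                   # assumption: the output neuron acts as NOR


def xor_network(x1, x2):
    hidden = step(W_hidden @ np.array([1.0, x1, x2]))  # [NOR(x), AND(x)]
    return int(step(w_output @ np.concatenate(([1.0], hidden))))


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```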

It makes linear classification and learning from data easy to understand. As I said, there are many different kinds of activation functions (tanh, ReLU, binary step), each with its own uses and qualities. For this example, we’ll be using what’s called the logistic sigmoid function, $\sigma(z) = 1/(1 + e^{-z})$. M maps the internal representation to the output scalar. Backpropagation is an algorithm for updating the weights and biases of a model based on their gradients with respect to the error function, starting from the output layer and working back to the first layer.
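The logistic sigmoid and the derivative that backpropagation relies on can be written as follows. This is a naive plain-Python sketch with our own function names, fine for moderate values of z. Mathematically the output stays strictly between 0 and 1, although floating-point rounding returns exactly 1.0 for large positive z.

```python
import math


def sigmoid(z):
    """Logistic sigmoid: squashes any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_derivative(z):
    """d/dz sigmoid(z) = sigmoid(z) * (1 - sigmoid(z)); used during backpropagation."""
    s = sigmoid(z)
    return s * (1.0 - s)


for z in (-10, -1, 0, 1, 10):
    print(f"z={z:>3}  sigmoid={sigmoid(z):.5f}  slope={sigmoid_derivative(z):.5f}")
```

The slope peaks at 0.25 when z = 0 and shrinks toward 0 as |z| grows, which is why saturated sigmoid units learn slowly.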

- There are several workarounds for this problem, which largely fall into architectural changes (e.g. ReLU) or algorithmic adjustments (e.g. greedy layer-wise training).

- We will delve into its history, how it operates, and how it compares to other models.

- However, it doesn’t ever touch 0 or 1, which is important to remember.

- From the diagram, the OR gate is 0 only if both inputs are 0.

- For XOR, inputs (1,0) and (0,1) give output 1, while inputs (1,1) and (0,0) give output 0.

One big problem with the perceptron model is that it can’t deal with data that doesn’t separate along a straight line. The XOR problem is an example of a dataset that cannot be divided by a single hyperplane, which prevents the perceptron from finding a solution [4]. As previously mentioned, the perceptron model is a linear classifier. It makes a decision boundary, a line in feature space separating the two classes [6]. When a new data point arrives, the perceptron model classifies it according to which side of the decision boundary it falls on. The perceptron is fast and easy to use because it is simple, but it can only solve problems whose data can be separated linearly.

In the next tutorial, we’ll put it into action by making our XOR neural network in Python. Our starting inputs are $0,0$, and we want to multiply them by weights that will give us our output, $0$. However, any number multiplied by 0 gives 0, so let’s move on to the second input, $0,1 \mapsto 1$. As I said earlier, the random synaptic weight will most likely not give us the correct output on the first try. So we need a way to adjust the synaptic weights until the network starts producing accurate outputs and “learns” the trend.
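The point about the input $0,0$ can be checked directly. In the sketch below (the `neuron` helper and its `bias` parameter are our own illustration), the weighted sum is always 0 for that input, so a sigmoid neuron without a bias outputs 0.5 however its weights are set.

```python
import math
import random


def neuron(inputs, weights, bias=0.0):
    """Weighted sum of the inputs plus a bias, squashed by a logistic sigmoid."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))


rng = random.Random(0)
for _ in range(3):
    weights = [rng.uniform(-5, 5), rng.uniform(-5, 5)]
    # With input (0, 0) the weighted sum is 0 whatever the weights are.
    print(neuron([0, 0], weights))  # 0.5 every time

# A bias term is what lets the neuron move away from 0.5 on this input.
print(neuron([0, 0], [1.0, 1.0], bias=-5.0) < 0.01)  # True
```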

However, these are much simpler, in both design and function, and nowhere near as powerful as the real kind. Although the perceptron model is fundamental, it has largely been eclipsed by more sophisticated deep learning techniques. But it is still valuable for machine learning, because it is a simple but effective way to teach the basics of neural networks and to get ideas for more complicated models. As deep learning keeps improving, the perceptron model’s core ideas and principles will likely continue to influence the design of new architectures and algorithms. This completes a single forward pass, after which our predicted_output is compared with the expected_output. Based on this comparison, the weights of both the hidden and output layers are updated using backpropagation.
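Forward pass, comparison and backpropagation can be combined into one training loop. The sketch below reuses the names `predicted_output` and `expected_output` from the text; everything else (NumPy, four hidden sigmoid units, squared-error gradients, learning rate 1.0, 10,000 full-batch epochs, seed 0, the `train_xor` helper) is our own assumption. With other seeds or fewer hidden units, training can occasionally stall on a plateau, so treat these settings as a starting point.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_xor(hidden_units=4, lr=1.0, epochs=10_000, seed=0):
    """Full-batch backpropagation on XOR with one hidden sigmoid layer."""
    rng = np.random.default_rng(seed)
    inputs = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
    expected_output = np.array([[0], [1], [1], [0]], dtype=float)

    W1 = rng.uniform(-1, 1, size=(2, hidden_units))
    b1 = np.zeros((1, hidden_units))
    W2 = rng.uniform(-1, 1, size=(hidden_units, 1))
    b2 = np.zeros((1, 1))

    for _ in range(epochs):
        # Forward pass.
        hidden = sigmoid(inputs @ W1 + b1)
        predicted_output = sigmoid(hidden @ W2 + b2)

        # Compare with the targets and propagate the error backwards.
        error = expected_output - predicted_output
        d_output = error * predicted_output * (1 - predicted_output)
        d_hidden = (d_output @ W2.T) * hidden * (1 - hidden)

        # Update step; error = target - prediction already points downhill.
        W2 += lr * hidden.T @ d_output
        b2 += lr * d_output.sum(axis=0, keepdims=True)
        W1 += lr * inputs.T @ d_hidden
        b1 += lr * d_hidden.sum(axis=0, keepdims=True)

    # Predictions from the final forward pass.
    return predicted_output


print(np.round(train_xor(), 3))
```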

- This data is the same for each kind of logic gate, since they all take in two boolean variables as input.

- As we move down along the line, the classification (a real number) increases.

In the same way, RNNs build on the perceptron model by adding recurrent connections. This lets the network learn temporal dependencies in sequential data [25]. Transistors are the basic building blocks of electronic devices.

The perceptron model laid the foundation for deep learning, a subfield of machine learning focused on neural networks with multiple layers (deep neural networks). Perceptrons can also be used for music genre classification, which involves identifying the genre of a given audio track. A perceptron model can be trained to classify audio into predefined genres [20]. This is done by extracting relevant features from the audio signal, such as spectral or temporal features, and combining them into the model's input. The outputs generated by the XOR logic cannot be separated by a single hyperplane. So in this article, let us see what XOR logic is and how to implement it using neural networks.